- Editing your feelings – writing to your brain

In a recent 1News interview, Gabe Newell, the co-founder of game development company Valve, said that new consumer-grade brain-computer interfaces will soon become mainstream and that they should already be in every game developer’s toolbox. Thanks to movements such as OpenBCI, brain-computer interfaces (BCIs) are now available at relatively low cost to developers and researchers across the globe.

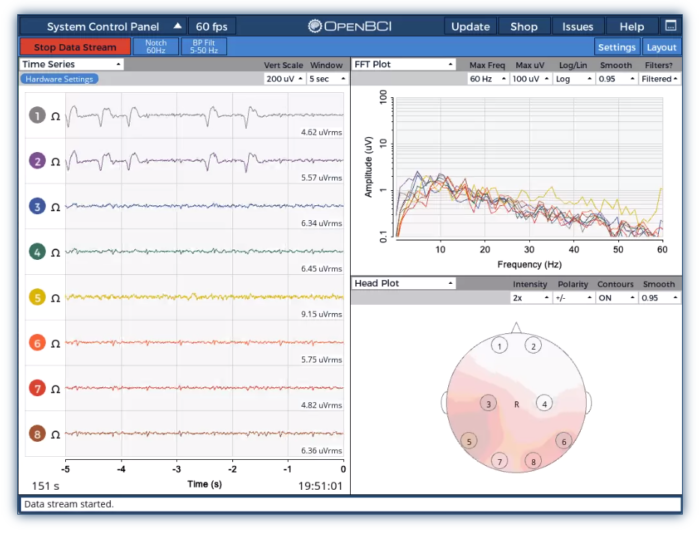

OpenBCI’s user interface. OpenBCI.com. CC 3.0 license.

OpenBCI’s wearable cap. OpenBCI.com. CC 3.0 license.

The DIY approach to brain-computer interfaces grew out of the biohacking movement, and a strong community now develops open-source hardware and software that anyone can use and build their own applications on.

This could, in theory, enable the following future applications:

- Edit your personality

- Edit your mood

- Improve your motivation on-demand

- Run sleep as an app

- Let other people program your emotions

The article sparked a few thoughts on the persuasive qualities of BCIs and how people could use or misuse them going forward.

Digital feedback loops as tools to persuade more effectively

Intentionally writing information to people’s brains is not new. However, the tools available for doing so are continuously evolving. Computer tools designed to influence people’s behaviours or attitudes are studied broadly in the field of design for behaviour change and, more specifically, in fields such as persuasive technology.

‘Steering’ people’s behaviour is not a new idea either. In ‘The Human Use of Human Beings’, first published in 1950, Norbert Wiener described information feedback loops between people and machines and predicted their growing importance:

“society can only be understood through a study of the messages and the communication facilities which belong to it; and that in the future development of these messages and communication facilities, messages between man and machines, between machines and man, and between machine and machine, are destined to play an ever-increasing part,” he wrote (Wiener 1954, p. 16).

Wiener was instrumental in establishing cybernetics as an area of inquiry, and its theories became essential building blocks for the field of artificial intelligence. Cybernetics is a systems approach that considers people and machines as goal-oriented systems which continuously ‘steer’ and adjust their course to pursue their endogenous ‘goals’. Among many other things, it has been referred to as the ‘science of steering systems’, and it can be applied to all kinds of systems: technological, biological, cultural or political.

Artificial intelligence technologies are also common ‘engines’ for persuasive systems, and the intersection between persuasive systems and cybernetics is an interesting and largely unexplored territory.

BCIs clearly create tight feedback loops between human brains and digital ‘brains’. This suggests that a cybernetic approach may be useful for understanding how people’s agency is strengthened or diminished when they interface their own ‘software’ with computers through a BCI.

Advantages of computer persuaders

The properties of computer-to-human persuasion differ considerably from those of interpersonal persuasion. According to Fogg (2002), the advantages of a computer as a persuader include:

- It never gets tired and can persuade 24/7

- It is difficult to know the source of digital persuasion

- It can manage and process a huge volume of data

- It can use different modalities to influence

- It can scale easily

- It can go where humans cannot go or are not welcome

We can already see how these advantages play out in social media and online advertising, where feedback loops are used to build learning systems that strive to understand how ‘users’ act in order to influence their behaviour. ‘Learning’ feedback loops are at the heart of artificial intelligence and machine learning, which underpin many online services that personalise content to the individual user based on their behaviour. Nir Eyal’s Hook Model, for example, describes well how this digital persuasion process works.
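The ‘learning’ feedback loop described above can be illustrated with a textbook epsilon-greedy multi-armed bandit. This is a swapped-in generic technique, not Eyal’s model or any platform’s actual system. In this minimal Python sketch, the service repeatedly chooses which content item to show, observes whether a simulated user engages, and shifts towards whatever works. The function `personalise()` and the engagement probabilities are hypothetical, made up purely for the simulation.

```python
import random


def personalise(engagement_prob: list[float], rounds: int = 5000,
                epsilon: float = 0.1, seed: int = 0) -> list[int]:
    """Epsilon-greedy loop that learns which item a simulated user engages with most.

    engagement_prob[i] is the (hypothetical) chance the user engages with item i.
    Returns how many times each item was shown.
    """
    rng = random.Random(seed)          # seeded so runs are reproducible
    n = len(engagement_prob)
    shows = [0] * n                    # times each item was shown
    engaged = [0] * n                  # times the user engaged with it
    for t in range(rounds):
        if t < n:
            item = t                   # show every item once to get a first estimate
        elif rng.random() < epsilon:
            item = rng.randrange(n)    # explore: occasionally try something at random
        else:
            # exploit: show the item with the best observed engagement rate so far
            item = max(range(n), key=lambda i: engaged[i] / shows[i])
        shows[item] += 1
        if rng.random() < engagement_prob[item]:  # simulated user reaction
            engaged[item] += 1         # feedback flows back into the next choice
    return shows


if __name__ == "__main__":
    print(personalise([0.05, 0.10, 0.30]))
```

Nothing in the loop ‘knows’ why an item works. It simply amplifies whatever produces engagement, so over many rounds the most engaging item ends up being shown far more often than the others.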

Clearly, BCIs offer an unparalleled opportunity to build feedback systems that are even more closely integrated with the human psyche. By extracting data directly from the brain, people’s holiest inner sanctuary, it becomes possible to create more accurate feedback loops, serve more engaging experiences and, eventually, ‘steer’ people’s behaviour more effectively.

BCIs offer new tools for creating human-computer feedback loops that were previously impossible, allowing more powerful real-time influence over people’s behaviour and feelings.

Imagine that you could ‘see’ your feelings in real time. Imagine also that you could program an app to monitor your feelings and ‘respond’ to them in order to achieve a goal you have programmed it to pursue.

For example, you could program the computer to keep you happy in the short term. If you deviate from that trajectory and its sensor picks up ‘sad’ signals, it immediately serves you content it knows makes you happy. It then measures your response to the ‘therapy’ in real time and keeps adjusting until your feelings reach the desired state.

So in this theoretical scenario, you can essentially use computer software to ‘steer’ your feelings in desired directions.
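To make this scenario concrete, here is a minimal Python sketch of the sense, compare, act loop it describes: a system that, in the cybernetic sense, steers towards a goal. Everything in it is hypothetical. `SimulatedUser`, `read_mood()` and `show_content()` stand in for a BCI reading and a content feed. Mood is assumed to be a single number between -1 (sad) and 1 (happy), and content is assumed to raise mood in proportion to its ‘intensity’. The controller is a simple proportional one, just one of many possible choices, and not how any real BCI product works.

```python
from dataclasses import dataclass


@dataclass
class SimulatedUser:
    """Hypothetical stand-in for a person wearing a BCI.

    Mood is modelled as one number in [-1, 1] (-1 = sad, 1 = happy).
    """
    mood: float = -0.5

    def read_mood(self) -> float:
        # Assumption: the BCI returns the current mood estimate directly.
        return self.mood

    def show_content(self, intensity: float) -> None:
        # Assumption: content shifts mood in proportion to its intensity,
        # clamped to the valid range.
        self.mood = max(-1.0, min(1.0, self.mood + 0.5 * intensity))


def steer_mood(user: SimulatedUser, target: float = 0.6, gain: float = 0.8,
               tolerance: float = 0.05, max_steps: int = 50) -> list[float]:
    """Proportional feedback loop: sense, compare with the goal, act, repeat.

    Returns every mood reading taken along the way.
    """
    history = []
    for _ in range(max_steps):
        mood = user.read_mood()        # sense
        history.append(mood)
        error = target - mood          # compare with the programmed goal
        if error <= tolerance:
            break                      # close enough to (or above) the target: stop
        intensity = gain * error       # push harder the further below target
        user.show_content(intensity)   # act, then measure the response next round
    return history


if __name__ == "__main__":
    readings = steer_mood(SimulatedUser())
    print([round(m, 3) for m in readings])
```

With these made-up parameters the readings climb steadily from -0.5 and the loop stops once mood is within 0.05 of the target. The goal lives in a single parameter, `target`: whoever sets it decides where the loop steers.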

However, someone else could just as easily use the technology to steer your feelings in a direction they desire. Newell suggests that cognitive malware may become an issue and that your mind may be hijacked by ill-intentioned others to an even greater extent than today. You could, for example, get stuck in an undesirable feedback loop that entices you to feel and do things you would otherwise not do. (Brainwashing, anyone?)

One-off tests and experiments with BCIs are probably not going to be problematic for users (hopefully). However, tighter brain-computer feedback loops deployed at scale carry a considerable risk of severe consequences, intended or not. It is not difficult to imagine the documented negative effects of social media (echo chambers, polarisation, ‘addiction’ and dark patterns) migrating into brain-computer software applications.

There is also a clear and present risk of reductionism: any such approach may fail to capture the complexities and ambiguities of human feelings, thus ‘steering’ people in the wrong direction or reducing their experiences to a small set of standard feelings.

A few more reflections:

- When you outsource your cognitive agency to computers, you also rely on their intent being beneficial to you: you usually don’t want to use a digital service that seeks to exploit, coerce or deceive you.

- It is not always clear what a service’s intent is, so most of the time you don’t know whether it is ‘on your side’ or not. If you are using an app to modify your feelings and letting it use data from your brain, you had better know what is under the hood.

- But how can we know the intent of services? Unless you have programmed every line of code yourself, you will have to rely on secondary information and trust the intent of those who design or manage the software which you use to program yourself. Trust in the technology providers becomes paramount.

- But what if that trust can also be manipulated, so that you believe all is well and actually feel good, while in fact you are being manipulated into thinking so by whoever commissions your brain-programming software?

- And if so, is your feeling authentic?

- If not, what does a world of ‘simulations and simulacra’ (Baudrillard) mean for the human experience?

References:

Fogg, B. J. (2002). Persuasive technology: using computers to change what we think and do. Ubiquity, 2002(December), 2.

Wiener, N. (1954). The Human Use of Human Beings. Doubleday & Company.

- Understanding GPT-3 as a Persuasive Technology

This is a short note on the persuasive potential of the GPT-3 language model recently released by OpenAI, and of the demos produced by the designers and coders who have already got their hands on its API.

If you are not yet convinced of the game-changing nature of the GPT-3 language model, please read this article (in full). Without spoiling the surprise at the end of the article, we can reflect on the fact that AI text generation is far more powerful now than ever before, and that high-fidelity text generation and more advanced machine imagination will fundamentally transform how we think about authenticity, authorship and original thought.

As digital media and content are already being weaponised and used extensively for political ends, GPT-3 ought to be discussed more closely in the field of persuasive technology (computer products and services designed with the intent to influence people’s behaviour and attitudes). People already spend a growing share of their lives through a ‘smart’ layer of technology: devices and software that subtly nudge our thoughts and actions towards goals that may or may not be in line with our personal desires. When we outsource cognitive and physical processes to technology, we place part of our agency in the hands of the material environment. That also means we must trust that these services are on our side.

To conceive of the new forms of services that GPT-3 and similar models may lead to, we can speculate a little based on some of the demos published recently:

- AI poetry, computer-generated imagination and provocation

- AI role-playing, computer programs driving persuasive dialogues

- AI-generated computer code, based on text input

These are but a few examples of what GPT-3 is capable of today, in a handful of experimental service interfaces released to the public. There are also examples of weird texts generated by GPT-3, which reveal some of the flaws that remain in natural language processing. Some of the demos may not seem that impressive yet, but if we extrapolate their potential a few years into the future, the effects may be profound.

In academia, texts are the fundamental vehicle for research: research is shared, scrutinised and discussed in textual form. Original thought and authorship are cornerstones of the academic process, but with the introduction of algorithms that can produce text indistinguishable from human writing, it may soon be impossible to discern whether a work is man-made or machine-made.

In business, persuasive texts are used everywhere: in sales, in marketing and at the strategic level, for example in investor pitches or reporting. Automated dialogue software is already commonplace in customer support, as virtual assistants (VAs) and chatbots proliferate across industries. VAs such as Alexa and Siri already provide interfaces for search and shopping to millions of people. More persuasive texts mean more persuasive services, but they also increase the risk of coercion or deception.

For people in general, it will be even more difficult to navigate the information landscape and discern whether an actor is a human or a machine. Perhaps we will place more value on authenticity and human interaction when machine-generated content is abundant. Perhaps we need to develop services that are ‘on our side’, working to fend off invasive, persuasive algorithms in our environments, like more advanced spam filters that protect our attention from predatory apps. We will also need to sharpen our sensitivity to computer-generated content in whatever form or medium it appears, so that we are not led or misled by hidden forces we do not have the bandwidth to understand.

These are but a few ways in which natural language processing technologies, acting as persuasive technologies, will affect society. A longer post will follow soon, *perhaps* written by me.

GPT-3 may not be a game-changer just yet, but it has definitely opened people’s minds to a universe of possible use cases and an unexplored, infinitely interesting design space.

Persuasive technology scholars should definitely be on their toes.

Other resources:

https://openai.com/blog/openai-api/

https://arxiv.org/abs/2005.14165

by Gustav Borgefalk

gustav.borgefalk@network.rca.ac.uk